The first thing to know is what Terraform expects of the scripts it executes. It does not pass data as regular command-line parameters, and it does not read results from return codes; a non-zero exit status only signals failure. Instead, it passes a JSON object via the script’s standard input (stdin) and expects a JSON object on the standard output (stdout) stream.
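To make this contract concrete, here is a minimal Bash sketch of an external program. It assumes `jq` is installed, and the `name` query key and `greeting` result key are hypothetical. Besides the normal path, it shows the failure case as Terraform's documentation describes it: a human-readable message on stderr plus a non-zero exit status, which Terraform then reports as an error.

```shell
#!/bin/bash
# Sketch of Terraform's external-program contract, assuming jq is installed.
# The "name" query key and "greeting" result key are hypothetical examples.

external_program() {
  local query name
  # Read the entire query object that Terraform writes to stdin.
  query="$(cat)"
  # Extract the hypothetical "name" key; empty if it is missing or null.
  name="$(jq -r '.name // empty' <<<"$query")"
  if [ -z "$name" ]; then
    # Failure: human-readable message on stderr, non-zero exit status.
    echo 'missing required query key "name"' >&2
    return 1
  fi
  # Success: a JSON object whose values are all strings, on stdout.
  jq -n -c --arg greeting "Hello, $name" '{greeting: $greeting}'
}

# Simulate Terraform passing a query with name = "world".
echo '{"name": "world"}' | external_program
```

The usage line prints `{"greeting":"Hello, world"}`; an empty query `{}` prints the message to stderr and returns 1 instead.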

The Terraform documentation for the `external` data source already contains a working example with explanations for Bash scripts:

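```shell
#!/bin/bash

# Exit immediately if any command fails
set -e

# Parse the JSON query from stdin into shell variables; @sh quotes each value safely
eval "$(jq -r '@sh "FOO=\(.foo) BAZ=\(.baz)"')"

# Placeholder for whatever the script actually needs to do
FOOBAZ="$FOO $BAZ"

# Emit the result as a JSON object on stdout
jq -n --arg foobaz "$FOOBAZ" '{"foobaz":$foobaz}'
```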
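The `eval` line deserves a closer look. jq's `@sh` format wraps each value in single quotes and escapes embedded quotes, so `eval` only ever performs plain variable assignments. The sketch below wraps the same commands in a function and feeds it a query containing a quote and a space. It assumes jq is installed; the `-c` flag (compact, one-line output) is an addition that is not in the original script.

```shell
#!/bin/bash
# The documented script's commands wrapped in a function so they can be exercised directly.
foobaz() {
  # @sh turns each value into a single-quoted shell word, e.g. it's -> 'it'\''s'
  eval "$(jq -r '@sh "FOO=\(.foo) BAZ=\(.baz)"')"
  # -c prints the object on one line (not in the original script)
  jq -n -c --arg foobaz "$FOO $BAZ" '{"foobaz":$foobaz}'
}

# A query whose values contain a single quote and a space
query='{"foo": "it'\''s", "baz": "a b"}'

# Show the assignments jq generates for eval
printf '%s' "$query" | jq -r '@sh "FOO=\(.foo) BAZ=\(.baz)"'

# Run the wrapped script logic on the same query
printf '%s' "$query" | foobaz
```

The first command prints `FOO='it'\''s' BAZ='a b'` and the second `{"foobaz":"it's a b"}`, so the quote survives the round trip without ever being interpreted by the shell.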

I will replicate this functionality for PowerShell on Windows and combine it with the OS detection from my other blog post.

The trick is handling the input. Terraform calls your script through PowerShell, roughly like `echo '{"key": "value"}' | powershell.exe ./script.ps1`, so the script has to read the JSON from standard input itself:

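```powershell
# Read one line from stdin (the JSON query Terraform passes) and parse it
$json = [Console]::In.ReadLine() | ConvertFrom-Json

# Build the result; Terraform requires every value to be a string
$foobaz = @{foobaz = "$($json.foo) $($json.baz)"}

# Serialize the hashtable to JSON and write it to stdout
Write-Output $foobaz | ConvertTo-Json
```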

You access the .NET `Console` class’s `In` property, which represents the standard input, and read a line to get the data Terraform passes through PowerShell to the script. From there, it is all regular PowerShell. The caveat is that you can no longer call your script as usual, because `ReadLine()` waits for input on stdin. To test it on the command line, you have to pipe the JSON in with the cumbersome command shown earlier:

```powershell
echo '{"json": "object"}' | powershell.exe ./script.ps1
```
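Typing the pipe by hand gets tedious, and it does not tell you whether the reply would satisfy Terraform. A small Bash helper can do both. `check_external` is a hypothetical name, not part of the original project; it assumes jq is installed and checks the rule from Terraform's documentation that the result must be a JSON object whose values are all strings. In the usage line, jq itself stands in for a real script.

```shell
#!/bin/bash
# Hypothetical helper: feed a query to an external program the way Terraform
# does (JSON on stdin) and validate the reply. Assumes jq is installed.
check_external() {
  local query="$1"
  shift
  local out
  # Run the program with the query on stdin; a non-zero exit means failure.
  if ! out="$(printf '%s' "$query" | "$@")"; then
    echo "program exited with a non-zero status" >&2
    return 1
  fi
  # Terraform requires a JSON object whose values are all strings.
  if ! jq -e 'type == "object" and all(.[]; type == "string")' <<<"$out" >/dev/null; then
    echo "output is not a JSON object of strings: $out" >&2
    return 1
  fi
  # Pass the validated result through.
  printf '%s\n' "$out"
}

# jq stands in for a real script here and prints {"foobaz":"a b"}.
check_external '{"foo": "a", "baz": "b"}' jq -c '{foobaz: "\(.foo) \(.baz)"}'
```

From a Bash shell on Windows, such as Git Bash, the PowerShell script could presumably be checked with `check_external '{"foo": "a", "baz": "b"}' powershell.exe ./script.ps1`; that combination is an untested assumption.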

Depending on how often you work with PowerShell scripts, you may bump into PowerShell’s execution policy restrictions when Terraform attempts to run the script:

```
│ Error: External Program Execution Failed
│
│ with data.external.script,
│ on main.tf line 8, in data "external" "script":
│ 8: program = [
│ 9: local.shell_name, "${path.module}/${local.script_name}"
│ 10: ]
│
│ The data source received an unexpected error while attempting to execute the program.
│
│ Program: C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe
│ Error Message: ./ps-script.ps1 : File
│ C:\Apps\Terraform-Run-PowerShell-And-Bash-Scripts\ps-script.ps1
│ cannot be loaded because running scripts is disabled on this system. For more information, see
│ about_Execution_Policies at https:/go.microsoft.com/fwlink/?LinkID=135170.
│ At line:1 char:1
│ + ./ps-script.ps1
│ + ~~~~~~~~~~~~~~~
│ + CategoryInfo : SecurityError: (:) [], PSSecurityException
│ + FullyQualifiedErrorId : UnauthorizedAccess
│
│ State: exit status 1
```

You can solve this problem by adjusting the execution policy. The quick and dirty way is to allow all scripts, which is the default on non-Windows PowerShell installations. Run the following as Administrator:

```powershell
Set-ExecutionPolicy -ExecutionPolicy Unrestricted -Scope LocalMachine
```

This is good enough for testing and your own use. If you regularly execute scripts that are not your own, you should choose a more restrictive policy such as `RemoteSigned`, or consider signing your scripts.

Another potential pitfall is the version of PowerShell in which you set the execution policy. I use PowerShell 7 by default but still encountered the error after applying the unrestricted policy. That is because Terraform runs powershell.exe, which is Windows PowerShell 5.1, the version Windows starts when you type powershell.exe in a terminal. PowerShell 7 runs as pwsh.exe and keeps its own execution policy setting.

```
PowerShell 7.4.1
PS C:\Users\lober> Set-ExecutionPolicy -ExecutionPolicy Unrestricted -Scope LocalMachine
PS C:\Users\lober> Get-ExecutionPolicy
Unrestricted
PS C:\Users\lober> powershell
Windows PowerShell
Copyright (C) Microsoft Corporation. All rights reserved.
Install the latest PowerShell for new features and improvements! https://aka.ms/PSWindows
PS C:\Users\lober> Get-ExecutionPolicy
Restricted
PS C:\Users\lober> $PsVersionTable

Name                           Value
----                           -----
PSVersion                      5.1.22621.2506
PSEdition                      Desktop
PSCompatibleVersions           {1.0, 2.0, 3.0, 4.0...}
BuildVersion                   10.0.22621.2506
CLRVersion                     4.0.30319.42000
WSManStackVersion              3.0
PSRemotingProtocolVersion      2.3
SerializationVersion           1.1.0.1
```

Once you also set the execution policy in Windows PowerShell 5.1, the version Terraform starts, Terraform has no more issues.

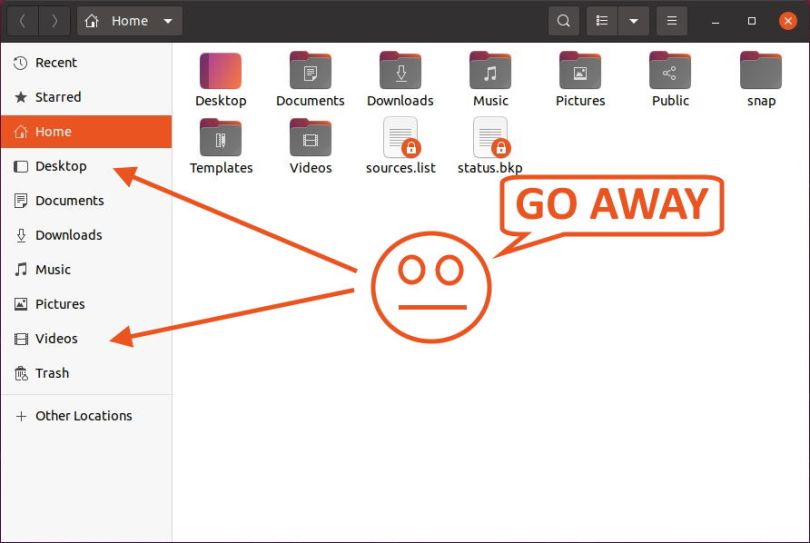

And for completeness’ sake, here is the Linux output.

You can find the source code on GitHub.

I hope this was useful.

Thank you for reading.